Evaluating Context Strategies for LLM Agents

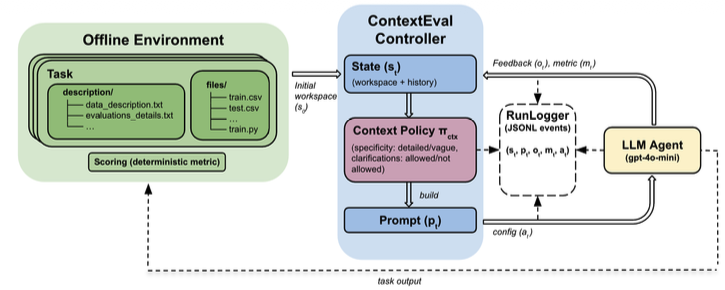

This capstone project at the University of California San Diego investigates how an LLM agent decides what information to carry forward, what to fetch, and what to ignore when completing a fixed, offline ML experimentation workflow. The core idea is that “context” is not just text length; it is a set of choices that shape what the agent pays attention to and how consistently it behaves. The project treats those choices as design decisions that can be made explicit, compared, and improved.

The work centers on building a repeatable way to test different context strategies in the same loop, under the same constraints. That includes variations like how much prior history to include, how to summarize or compress, how to structure key facts, and when to retrieve supporting documentation versus relying on what is already in the prompt. Each strategy is run through the same tasks so differences in outcomes can be attributed to the context approach rather than the task itself.
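One way to picture this setup is to treat each context strategy as a function that turns the full interaction history into the text the agent actually sees, and then run every strategy over the same task list. The sketch below is only an illustration under stated assumptions: the strategy names, the `FACT:` prefix convention, and `stub_agent` (a stand-in for a real LLM call) are all hypothetical and are not taken from the project's code.

```python
from typing import Callable, Dict, List

# Assumption: a context strategy maps the full history to the prompt context.
ContextStrategy = Callable[[List[str]], str]


def full_history(history: List[str]) -> str:
    """Carry everything forward."""
    return "\n".join(history)


def last_k(k: int) -> ContextStrategy:
    """Keep only the k most recent history entries."""
    def strategy(history: List[str]) -> str:
        return "\n".join(history[-k:]) if k > 0 else ""
    return strategy


def key_facts_only(history: List[str]) -> str:
    """Keep only entries marked as structured facts (hypothetical 'FACT:' prefix)."""
    return "\n".join(line for line in history if line.startswith("FACT:"))


def stub_agent(context: str, task: str) -> bool:
    """Stand-in for an LLM call: succeeds only if the needed fact is in context."""
    return task in context


def compare(
    strategies: Dict[str, ContextStrategy],
    history: List[str],
    tasks: List[str],
) -> Dict[str, float]:
    """Run every strategy on the same history and tasks; return success rates."""
    results = {}
    for name, strategy in strategies.items():
        context = strategy(history)
        successes = sum(stub_agent(context, task) for task in tasks)
        results[name] = successes / len(tasks)
    return results


# Toy history and tasks, invented for illustration only.
history = [
    "FACT: learning_rate=0.01",
    "log: epoch 1 done",
    "FACT: dataset=cifar10",
    "log: epoch 2 done",
]
tasks = ["learning_rate=0.01", "dataset=cifar10"]

results = compare(
    {
        "full_history": full_history,
        "last_2": last_k(2),
        "key_facts_only": key_facts_only,
    },
    history,
    tasks,
)
print(results)
```

Because the history and the tasks are held fixed, any difference between the success rates comes from the strategy alone, which is the property the comparison depends on. In this toy case the truncated history loses the early learning-rate fact, while the other two strategies keep it.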

To make comparisons meaningful, the project emphasizes controlled experimentation and clear measurements. Each run is designed to be repeated across random seeds and small prompt variations, so the results reflect reliable behavior rather than one-off wins. The output is a set of findings about which context decisions produce more stable, trustworthy results in this workflow and which ones introduce noise or brittleness.
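A simple way to separate reliable behavior from one-off wins is to score the same strategy under several seeds and report the spread alongside the mean. The following is a minimal sketch, assuming a single numeric score per run; `simulated_run`, the base score of 0.8, and the noise levels are made-up placeholders for a real agent run, not measurements from the project.

```python
import random
import statistics
from typing import Callable, Iterable, List, Tuple


def simulated_run(seed: int, noise: float) -> float:
    """Hypothetical stand-in for one agent run: a base score plus seeded noise."""
    rng = random.Random(seed)  # same seed always yields the same score
    return 0.8 + rng.uniform(-noise, noise)


def summarize(scores: List[float]) -> Tuple[float, float]:
    """Return (mean, population standard deviation) of the scores."""
    return statistics.mean(scores), statistics.pstdev(scores)


def evaluate_across_seeds(
    run: Callable[[int], float], seeds: Iterable[int]
) -> Tuple[float, float]:
    """Score one strategy once per seed and summarize the results."""
    return summarize([run(seed) for seed in seeds])


SEEDS = range(10)

# Two imaginary strategies with the same average quality but different stability.
report = {
    "stable": evaluate_across_seeds(lambda s: simulated_run(s, 0.02), SEEDS),
    "brittle": evaluate_across_seeds(lambda s: simulated_run(s, 0.30), SEEDS),
}

for name, (mean, spread) in report.items():
    print(f"{name}: mean={mean:.3f} spread={spread:.3f}")
```

Reporting the spread next to the mean makes brittleness visible: two strategies can look similar on average while one of them swings widely from seed to seed. The same loop could iterate over small prompt variations instead of seeds.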

Next semester, the project will continue by expanding the scope of what “context strategy” can include and testing it under more varied conditions. That may mean adding new task types, introducing longer or messier documentation, or increasing the complexity of the agent’s tool use so the context policy has to work harder. The goal is to see whether the same principles hold when the agent faces more realistic variation.

The continuation will also focus on turning the work into a more usable framework: clearer guidelines for choosing a context strategy, stronger reporting, and a setup that others can reuse to compare approaches. Instead of only describing what happened in one setting, the project aims to produce a portable method for studying context decisions and a practical set of recommendations for building more dependable LLM agent workflows.

Stay Connected

Follow our journey on Medium and LinkedIn.