Judging the Judges: Using AI and Humans to Evaluate LLM Explanations

Evaluating Context Strategies for LLM Agents

This capstone project evaluates how the way we prompt an LLM changes the quality of its explanations for college-level technical concepts, and how well automated “LLM-as-judge” evaluations match real human preferences. It is motivated by the fact that explanations are used to build trust and support learning, but different users need different levels of depth and clarity.

The team builds a pipeline that first selects difficult concepts from domain glossaries and filters for terms that require abstract reasoning or cross-domain understanding. For each concept, the system generates explanations using multiple prompt templates that vary in constraint and structure, including a minimal baseline, a moderately structured “multi-aspect” prompt, and a heavily structured “multi-perspective” prompt.
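The generation step is essentially a grid: every selected concept is paired with every prompt template. The sketch below shows that grid under stated assumptions. The template wording, the `TEMPLATES` dictionary, and the `build_generation_jobs` helper are hypothetical, since the project's actual prompt text is not given here. The strings only illustrate the three constraint levels (minimal, moderately structured, heavily structured).

```python
from itertools import product

# Hypothetical template wording: illustrative only, not the project's prompts.
TEMPLATES = {
    "baseline": "Explain the concept '{concept}'.",
    "multi_aspect": (
        "Explain the concept '{concept}' for a college student. "
        "Cover its definition, an intuitive example, and why it matters."
    ),
    "multi_perspective": (
        "Explain the concept '{concept}' from several perspectives "
        "(formal, intuitive, historical, applied), using a separate "
        "labeled section for each and a summary at the end."
    ),
}

def build_generation_jobs(concepts, templates=TEMPLATES):
    """Return one (concept, template_name, prompt) job per concept-template pair."""
    return [
        (concept, name, template.format(concept=concept))
        for concept, (name, template) in product(concepts, templates.items())
    ]

concepts = ["eigenvector", "entropy", "Bayesian prior"]  # placeholder concepts
jobs = build_generation_jobs(concepts)
print(len(jobs))  # 3 concepts x 3 templates = 9 prompts
```

With 30 concepts and three templates, the same grid yields the 90 explanations used in the Quarter 1 pilot.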

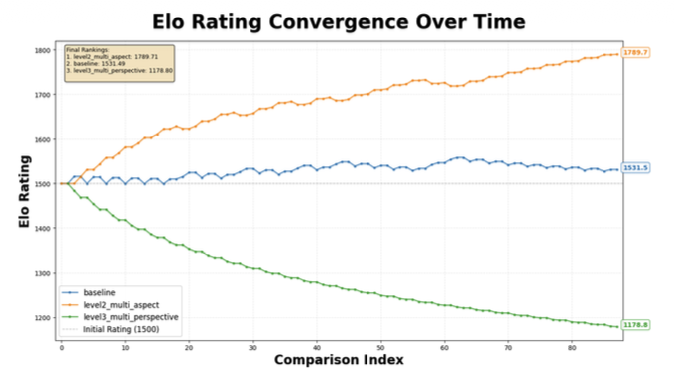

To score explanation quality, the project collects pairwise comparisons from an evaluator model instructed to judge which of two explanations a typical non-expert college student would prefer, with reverse-order checks (re-judging each pair with the explanations swapped) to reduce ordering bias. The resulting wins, losses, and ties are aggregated into Elo ratings to produce a prompt leaderboard. The Quarter 1 prototype ran on 30 concepts (90 explanations) with 540 pairwise judgments.
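The scoring step combines two ideas: reconciling each judgment with its reverse-order counterpart, then feeding the reconciled outcomes into Elo updates. The sketch below is one plausible way to do this, not the project's confirmed method. It assumes that a verdict which flips when the order is swapped counts as a tie, that ratings start at 1500, and that the K-factor is 32. The 1500 starting point is consistent with the reported Quarter 1 ratings, which average exactly 1500 ((1789.71 + 1531.49 + 1178.80) / 3 = 1500.00). The helper names and the toy verdicts are hypothetical.

```python
from collections import defaultdict

def reconcile(verdict_ab, verdict_ba):
    """Combine a judgment of (A, B) with one of the swapped order (B, A).

    Each verdict is "first", "second", or "tie" relative to the order shown
    to the judge. Assumed rule: a win counts only if it survives the swap;
    inconsistent verdicts become a tie. Returns A's score (1, 0.5, or 0).
    """
    # Map the swapped verdict back into the (A, B) frame.
    flipped = {"first": "second", "second": "first", "tie": "tie"}[verdict_ba]
    if verdict_ab == flipped:
        return {"first": 1.0, "second": 0.0, "tie": 0.5}[verdict_ab]
    return 0.5  # order-dependent verdicts are treated as ties

def expected_score(r_a, r_b):
    """Standard Elo expected score of A against B."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))

def elo_ratings(matches, k=32.0, initial=1500.0):
    """matches: iterable of (prompt_a, prompt_b, score_a), score_a in {1, 0.5, 0}."""
    ratings = defaultdict(lambda: initial)
    for a, b, score_a in matches:
        e_a = expected_score(ratings[a], ratings[b])
        delta = k * (score_a - e_a)
        ratings[a] += delta  # A gains what B loses, so the mean stays at `initial`
        ratings[b] -= delta
    return dict(ratings)

# Toy data, not project judgments: (A, B, verdict in A-B order, verdict in B-A order)
raw = [
    ("multi_aspect", "baseline", "first", "second"),        # consistent win for A
    ("baseline", "multi_perspective", "first", "first"),    # order-dependent -> tie
    ("multi_aspect", "multi_perspective", "first", "second"),
]
matches = [(a, b, reconcile(ab, ba)) for a, b, ab, ba in raw]
print(elo_ratings(matches))
```

Sequential Elo updates depend on match order; a pipeline could shuffle and average over several orderings, or fit a Bradley-Terry model instead, if order sensitivity matters.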

Quarter 1 results showed a clear separation between prompt strategies: the moderately structured multi-aspect prompt ranked highest (Elo 1789.71), the baseline placed second (1531.49), and the heavily structured multi-perspective prompt performed worst (1178.80). The analysis suggests that some scaffolding improves readability and usefulness, while too much structure can hurt clarity for non-experts.
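Elo gaps are easier to interpret as the head-to-head preference rates they imply. The sketch below applies the standard Elo expected-score formula, E_A = 1 / (1 + 10^((R_B - R_A) / 400)), to the Quarter 1 ratings reported above. It assumes the leaderboard used the conventional 400-point scale. The results are model-implied rates, not observed win counts from the 540 judgments.

```python
RATINGS = {  # Quarter 1 leaderboard values reported above
    "multi_aspect": 1789.71,
    "baseline": 1531.49,
    "multi_perspective": 1178.80,
}

def win_probability(r_a, r_b):
    """Elo-implied probability that A is preferred over B (ties counted as half)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))

for a, b in [("multi_aspect", "baseline"),
             ("baseline", "multi_perspective"),
             ("multi_aspect", "multi_perspective")]:
    p = win_probability(RATINGS[a], RATINGS[b])
    print(f"{a} vs {b}: {p:.2f}")
```

Under that assumption, this prints implied preference rates of about 0.82, 0.88, and 0.97. The multi-aspect prompt would be expected to beat the baseline roughly four times out of five, and the multi-perspective prompt almost every time.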

For next quarter, the team proposes to scale the benchmark from the 30-concept pilot to a few hundred concepts, add systematic human rubric scoring (explainability, complexity, familiarity) to analyze where human and LLM-judge evaluations agree or diverge, and test whether critique-guided prompt rewriting measurably improves explanation quality. Planned deliverables are a full research report and an interactive website with leaderboards, example explanations, and disagreement visualizations.
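The proposal does not name an agreement metric, so the sketch below swaps in a common choice: raw agreement plus Cohen's kappa, kappa = (p_o - p_e) / (1 - p_e), which corrects for agreement expected by chance from each rater's label frequencies. It assumes human and judge verdicts have been aligned per comparison with labels "A", "B", or "tie". The indices of disagreeing comparisons are the kind of input a disagreement visualization could use. The function name and the toy labels are hypothetical.

```python
from collections import Counter

def agreement_stats(human, judge):
    """Raw agreement, Cohen's kappa, and disagreement indices for two raters.

    Each list holds one label per comparison ("A", "B", or "tie"), aligned so
    human[i] and judge[i] refer to the same pair of explanations.
    """
    if len(human) != len(judge) or not human:
        raise ValueError("need two non-empty, aligned verdict lists")
    n = len(human)
    observed = sum(h == j for h, j in zip(human, judge)) / n
    # Chance agreement from each rater's marginal label frequencies.
    h_counts, j_counts = Counter(human), Counter(judge)
    expected = sum(h_counts[label] * j_counts[label] for label in h_counts) / n**2
    # By convention, report kappa = 1 when both raters use one identical label.
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)
    disagreements = [i for i, (h, j) in enumerate(zip(human, judge)) if h != j]
    return observed, kappa, disagreements

human = ["A", "A", "B", "tie", "A", "B"]  # toy labels, not project data
judge = ["A", "B", "B", "A", "A", "B"]
obs, kappa, where = agreement_stats(human, judge)
print(f"agreement={obs:.2f} kappa={kappa:.2f} disagree_at={where}")
# prints: agreement=0.67 kappa=0.43 disagree_at=[1, 3]
```

Kappa is more informative than raw agreement when one label dominates, for example if the judge rarely outputs ties.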
