Real-Time Multi-Agent AI Debate System

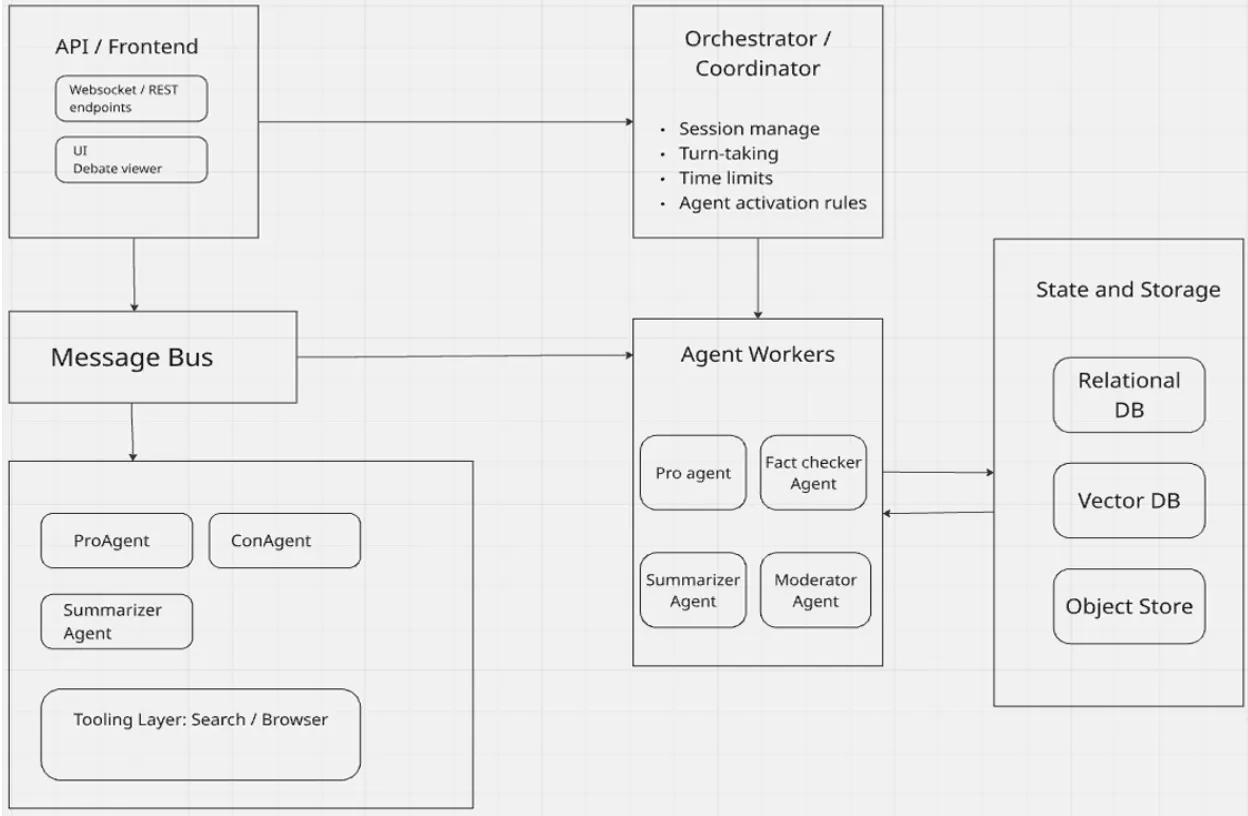

This project built a real-time, web-based multi-agent AI debate platform that makes model reasoning visible through structured argument. Instead of returning a single answer, the system runs a panel-style debate where agents challenge assumptions, introduce evidence, and respond across multiple rounds.

Users enter any topic and watch Pro and Con agents generate opening statements, rebuttals, and closings. A Judge agent scores each round on a 1–10 scale and explains the decision using a clear rubric, while the UI shows the current round, a live scoreboard, and a chat-style transcript as the debate unfolds.
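As a rough illustration of the live scoreboard described above, the sketch below keeps one judged entry per round and derives running totals from them. The class and field names (`RoundScore`, `Scoreboard`, `rationale`) are hypothetical, and the sketch assumes the Judge gives each side its own 1-10 score per round, which the description does not spell out.

```python
from dataclasses import dataclass, field


@dataclass
class RoundScore:
    """One judged round. Field names are illustrative, not the project's schema."""
    round_name: str   # e.g. "opening", "rebuttal", "closing"
    pro: int          # Judge's score for the Pro side (assumed per-side scoring)
    con: int          # Judge's score for the Con side
    rationale: str    # Judge's rubric-based explanation

    def __post_init__(self):
        # Reject anything outside the 1-10 scale the Judge is asked to use.
        for side, value in (("pro", self.pro), ("con", self.con)):
            if not 1 <= value <= 10:
                raise ValueError(f"{side} score {value} is outside the 1-10 scale")


@dataclass
class Scoreboard:
    rounds: list = field(default_factory=list)

    def add(self, score: RoundScore) -> None:
        self.rounds.append(score)

    def totals(self) -> dict:
        # Running totals across all rounds judged so far.
        return {
            "pro": sum(r.pro for r in self.rounds),
            "con": sum(r.con for r in self.rounds),
        }

    def leader(self) -> str:
        totals = self.totals()
        if totals["pro"] == totals["con"]:
            return "tie"
        return "pro" if totals["pro"] > totals["con"] else "con"
```

A UI could call `totals()` after each judged round to refresh the scoreboard, while the `rationale` strings feed the transcript.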

Under the hood, a Flask backend orchestrates the debate flow through REST endpoints for starting a debate, generating arguments, judging, and completing an episode. Role-specific prompt templates guide each stage, and a prompt management panel allows changing debate style without editing code.
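The flow behind those endpoints can be sketched in plain Python, without the Flask layer. This is a minimal sketch under stated assumptions: `DebateSession`, `PROMPT_TEMPLATES`, the template wording, and the injected `generate` callable (a stand-in for the model call) are all hypothetical, and the actual route names and prompts are not given in the description.

```python
from string import Template

STAGES = ("opening", "rebuttal", "closing")
TURN_ORDER = ("pro", "con")

# Hypothetical stage templates; the project's real prompt text is not public.
PROMPT_TEMPLATES = {
    "opening": Template("You argue the $role side of: $topic. Give an opening statement."),
    "rebuttal": Template("You argue the $role side of: $topic. Rebut this: $opponent_last"),
    "closing": Template("You argue the $role side of: $topic. Give a closing statement."),
}


class DebateSession:
    """Steps a debate through its stages, one turn per call."""

    def __init__(self, topic, generate, templates=None):
        self.topic = topic
        self.generate = generate  # callable(prompt) -> str, stand-in for the model
        # Copy so templates can be swapped per session without touching code.
        self.templates = dict(templates or PROMPT_TEMPLATES)
        self.transcript = []
        self._turns = [(stage, role) for stage in STAGES for role in TURN_ORDER]

    @property
    def finished(self):
        return len(self.transcript) == len(self._turns)

    def next_turn(self):
        if self.finished:
            raise RuntimeError("debate already complete")
        stage, role = self._turns[len(self.transcript)]
        opponent = "con" if role == "pro" else "pro"
        # Most recent statement from the other side, if any, for rebuttals.
        opponent_last = next(
            (t["text"] for t in reversed(self.transcript) if t["role"] == opponent),
            "",
        )
        prompt = self.templates[stage].safe_substitute(
            role=role, topic=self.topic, opponent_last=opponent_last
        )
        entry = {"stage": stage, "role": role, "text": self.generate(prompt)}
        self.transcript.append(entry)
        return entry
```

In a setup like this, a "start" endpoint would create the session, a "generate" endpoint would call `next_turn()`, and a prompt management panel would only need to replace entries in `session.templates`.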

To ground arguments in facts, the platform includes a retrieval pipeline that ingests documents (including PDFs), chunks them, embeds them, and stores them in a vector database. At runtime, the system retrieves relevant snippets and packages them into a compact evidence set injected into the Pro and Con prompts, with citations surfaced in the UI.
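The chunk, embed, store, and retrieve steps can be illustrated with a toy, standard-library version. Everything here is a stand-in: the bag-of-words `embed` function replaces a real embedding model, the in-memory `EvidenceStore` replaces the vector database, and text is assumed to be already extracted from the PDFs. The names and the chunk sizes are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text, size=40, overlap=10):
    """Split text into word windows of `size` that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    chunks, start = [], 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break
        start += size - overlap
    return chunks


def embed(text):
    # Toy embedding: token counts. A real pipeline would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class EvidenceStore:
    """In-memory stand-in for the vector database."""

    def __init__(self):
        self._rows = []  # (source, chunk, vector)

    def add_document(self, source, text, size=40, overlap=10):
        for chunk in chunk_text(text, size, overlap):
            self._rows.append((source, chunk, embed(chunk)))

    def search(self, query, k=3):
        query_vec = embed(query)
        scored = [(cosine(query_vec, vec), src, chunk) for src, chunk, vec in self._rows]
        scored = [row for row in scored if row[0] > 0]  # drop unrelated chunks
        scored.sort(key=lambda row: row[0], reverse=True)
        return [{"source": src, "text": chunk, "score": score} for score, src, chunk in scored[:k]]


def build_evidence_block(hits):
    """Format hits as numbered snippets so agents can cite them as [1], [2], ..."""
    return "\n".join(f"[{i}] ({h['source']}) {h['text']}" for i, h in enumerate(hits, start=1))
```

The string returned by `build_evidence_block` is what would be injected into the Pro and Con prompts; keeping the source name next to each numbered snippet is one way to let the UI map a citation back to its document.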

Engineering effort focused on reliability and product readiness: parsing judge outputs when formats drift, managing context limits as debates grow, preventing repetition and topic drift across rounds, and keeping the UI responsive during long model calls. Debates are logged as structured data in a SQL database so users can reload past debates, audit decisions, and analyze outcomes over time.
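One way to handle judge outputs whose format drifts is to try strict parsing first and fall back to a looser pattern, then log the raw text alongside whatever was recovered. The sketch below is an assumption-laden illustration, not the project's parser: the expected JSON keys (`pro`, `con`), the fallback regex, and the `judge_rounds` table schema are all hypothetical. It uses `sqlite3` as a convenient SQL database for the example.

```python
import json
import re
import sqlite3

# Fallback pattern for free-text verdicts such as "Pro: 7/10, Con: 5/10".
SCORE_RE = re.compile(r"\b(pro|con)\b[^0-9\n]{0,20}(\d{1,2})(?:\s*/\s*10)?", re.IGNORECASE)


def _valid(scores):
    return set(scores) == {"pro", "con"} and all(1 <= v <= 10 for v in scores.values())


def parse_judge_output(raw):
    """Return {'pro': int, 'con': int}, or None if no usable scores are found."""
    # 1) Preferred format: a JSON object somewhere in the reply.
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match:
        try:
            data = json.loads(match.group(0))
            scores = {side: int(data[side]) for side in ("pro", "con")}
        except (ValueError, KeyError, TypeError):
            scores = None
        if scores and _valid(scores):
            return scores
    # 2) Fallback: first "Pro ... N" and "Con ... N" mentions in plain text.
    found = {}
    for side, value in SCORE_RE.findall(raw):
        found.setdefault(side.lower(), int(value))
    return found if _valid(found) else None


def log_round(conn, debate_id, stage, raw, scores):
    """Store the raw judge text plus parsed scores (NULL when parsing failed)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS judge_rounds "
        "(debate_id TEXT, stage TEXT, pro INTEGER, con INTEGER, raw_output TEXT)"
    )
    conn.execute(
        "INSERT INTO judge_rounds VALUES (?, ?, ?, ?, ?)",
        (debate_id, stage,
         scores["pro"] if scores else None,
         scores["con"] if scores else None,
         raw),
    )
    conn.commit()
```

Keeping the raw output in the same row as the parsed scores means a failed parse can still be audited later, and the round can be re-judged or re-parsed without losing the original verdict.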
